Hadoop’s Inherent Advantages Over RDBMS for DW / BI; Especially in the “Big Data” Era

By David Teplow

[NOTE: This article was originally published in The Data Warehousing Institute’s quarterly BI Journal in March 2013. It was also one of two articles chosen from 2013 to be republished in TDWI’s annual Best of BI in February 2014.]

Background

Relational database management systems (RDBMS) were specified by IBM’s E.F. Codd in 1970 and first commercialized by Oracle Corporation (then Relational Software, Inc.) in 1979. Since that time, practically every database has been built using an RDBMS—either proprietary (Oracle, SQL Server, DB2, and so on) or open source (MySQL, PostgreSQL). This was entirely appropriate for transactional systems that dealt with structured data and benefitted when that data was normalized.

In the late 1980s, we began building decision support systems (DSS)—also referred to as business intelligence (BI), data warehouse (DW), or analytical systems. We used RDBMS for these, too, because it was the de facto standard and essentially the only choice. To meet the performance requirements of DSS, we denormalized the data to eliminate the need for most table joins, which are costly from a resource and time perspective. We accepted this adaptation (some would say “misuse”) of the relational model because there were no other options—until recently.

Relational databases are even less suitable for handling so-called “Big Data.” Transactional systems were designed for just that—transactions; data about a point in time when a purchase occurred or an event happened. Big Data is largely a result of the electronic record we now have about the activity that precedes and follows a purchase or event. This data includes the path taken to a purchase—either physical (surveillance video, location service, or GPS device) or virtual (server log files or clickstream data). It also includes data on where customers may have veered away from a purchase (product review article or comment, shopping cart removal or abandonment, jumping to a competitor’s site), and it certainly includes data about what customers say or do as a result of purchases or events via tweets, likes, yelps, blogs, reviews, customer service calls, and product returns. All this data dwarfs transactional data in terms of volume, and it usually does not lend itself to the structure of tables and fields.

The Problems with RDBMS for DW / BI

To meet the response-time demands of DSS, we pre-joined and pre-aggregated data into star schemas or snowflake schemas (dimensional models) instead of storing data in third normal form (relational models). This implied that we already knew what questions we would need to answer, so we could create the appropriate dimensions by which to measure facts. In the real world, however, the most useful data warehouses and data marts are built iteratively. Over time, we realize that additional data elements or whole new dimensions are needed or that the wrong definition or formula was used to derive a calculated field value. These iterations entail changes to the target schema along with careful and often significant changes to the extract-transform-load (ETL) process.

The benefit of denormalizing data in a data warehouse is that it largely avoids the need for joining tables, which are usually quite large and require an inordinate amount of machine resources and time to join. The risk associated with denormalization is that it makes the data susceptible to update anomalies if field values change.

For example, suppose the price of a certain item changes on a certain date. In our transactional system, we would simply update the Price field in the Item table or “age out” the prior price by updating the effective date and adding a new row to the table with the new price and effective dates. In our data warehouse, however, the price would most likely be contained within our fact table and replicated for each occurrence of the item.

Anomalies can be introduced by an update statement that misses some occurrences of the old price or catches some it shouldn’t have. Anomalies might also result from an incremental data load that runs over the weekend and selects the new price for every item purchased in the preceding week when, in fact, the price change was effective on Wednesday (which may have been the first of the month) and should not have been applied to earlier purchases.

With any RDBMS, the schema must be defined and created in advance, which means that before we can load our data into the data warehouse or data mart, it must be transformed—the dreaded “T” in ETL. Transformation processes tend to be complex, as they involve some combination of deduplicating, denormalizing, translating, homogenizing, and aggregating data, as well as maintaining metadata (that is, “data about the data” such as definitions, sources, lineage, derivations, and so on). Typically, they also entail the creation of an additional, intermediary database—commonly referred to as a staging area or an operational data store (ODS). This additional database comes with the extra costs of another license and database administrator (DBA). This is also true for any data marts that are built, which is often done for each functional area or department of a company.

Each step in the ETL process involves not only effort, expense, and risk, but also requires time to execute (not to mention the time required to design, code, test, maintain, and document the process). Decision support systems are increasingly being called on to support real-time operations such as call centers, military intelligence, recommendation engines, and personalization of advertisements or offers. When update cycles must execute more frequently and complete more rapidly, a complex, multi-step ETL process simply will not keep up when high volumes of data arriving at high velocity must be captured and consumed.

The Problems with Big Data

Big Data is commonly characterized as having high levels of volume, velocity, and variety. Volume has always been a factor in BI/DW, as discussed earlier. The velocity of Big Data is high because it flows from the so-called “Internet of Things,” which is always on and includes not just social media and mobile devices but also RFID tags, Web logs, sensor networks, on-board computers, and more. To make sense of the steady stream of data that these devices emit requires a DSS that, likewise, is always on. Unfortunately, high availability is not standard with RDBMS, although each brand offers options that provide fault resilience or even fault tolerance. These options are neither inexpensive to license nor easy to understand and implement. To ensure that Oracle is always available requires RAC (Real Application Clusters for server failover) and/or Data Guard (for data replication). RAC will add over 48 percent to the cost of your Oracle license; Data Guard, over 21 percent[1].

Furthermore, to install and configure RAC or Data Guard properly is not simple or intuitive, but instead requires specialized expertise about Oracle as well as your operating system. We were willing to pay this price for transactional systems because our businesses depended on them to operate. When the DSS was considered a “downstream” system, we didn’t necessarily need it to be available all the time. For many businesses today, however, decision support is a mainstream system that is needed 24/7.

Variety is perhaps the biggest “Big Data” challenge and the primary reason it’s poorly suited for RDBMS. The many formats of Big Data can be broadly categorized as structured, semi-structured, or unstructured. Most data about a product return and some data about a customer service call could be considered structured and is readily stored in a relational table. For the most part, however, Big Data is semi-structured (such as server log files or likes on a Facebook page) or completely unstructured (such as surveillance video or product-related articles, reviews, comments, or tweets). These data types do not fit neatly—if at all—into tables made up of fields that are rigidly typed (for example, six-digit integer, floating point number, fixed- or variable-length character string of exactly X or no more than Y characters, and so on) and often come with constraints (for example, range checks or foreign key lookups).

Like high availability, high performance is an option for an RDBMS, and vendors have attempted to address this with features that enable partitioning, caching, and parallelization. To take advantage of these features, we have to license these software options and also purchase high-end (that is, expensive) hardware to run it on—full of disks, controllers, memory, and CPUs. We then have to configure the database and the application to take advantage of components such as data partitions, memory caches and/or parallel loads, parallel joins/selects, and parallel updates.

A New Approach

In December of 2004, Google published a paper on MapReduce, which was a method it devised to store data across hundreds or even thousands of servers, then use the power of each of those servers as worker nodes to “map” its own local data and pass along the results to a master node that would “reduce” the result sets to formulate an answer to the question or problem posed. This allowed a “Google-like” problem (such as which servers across the entire Internet have content related to a particular subject and which of those are visited most often) to be answered in near real time using a divide-and-conquer approach that is both massively parallel and infinitely scalable.

Yahoo! used this MapReduce framework with its distributed file system (which grew to nearly 50,000 servers) to handle Internet searches and the required indexing of millions of websites and billions of associated documents. Doug Cutting, who led these efforts at Yahoo!, contributed this work to the open source community by creating the Apache Hadoop project, which he named for his son’s toy elephant. Hadoop has been used by Google and Yahoo! as well as Facebook to process over 300 petabytes of data. In recent years, Hadoop has been embraced by more and more companies for the analysis of more massive and more diverse data sets.

Data is stored in the Hadoop Distributed File System (HDFS) in its raw form. There is no need to normalize (or denormalize) the data, nor to transform it to fit a fixed schema, as there is with RDBMS. Hadoop requires no data schema—and no index schema. There is no need to create indexes, which often have to be dropped and then recreated after data loads in order to accelerate performance. The common but cumbersome practice of breaking large fact tables into data partitions is also unnecessary in Hadoop because HDFS does that by default. All of your data can be readily stored in Hadoop regardless of its volume (inexpensive, commodity disk drives are the norm), velocity (there is no transformation process to slow things down), or variety (there is no schema to conform to).

As for availability and performance, Hadoop was designed from the beginning to be fault tolerant and massively parallel. Data is always replicated on three separate servers, and if a node is unavailable or merely slow, one of the other nodes takes over processing that data set. Servers that recover or new servers that are added are automatically registered with the system and immediately leveraged for storage and processing. High availability and high performance is “baked in” without the need for any additional work, optional software, or high-end hardware.

Although getting data into Hadoop is remarkably straightforward, getting it out is not as simple as with RDBMS. Data in Hadoop is accessed by MapReduce routines that can be written in Java, Python, or Ruby, for example. This requires significantly more work than writing a SQL query. A scripting language called Pig, which is part of the Apache Hadoop project, can be used to eliminate some of the complexity of a programming language such as Java. However, even Pig is not as easy to learn and use as SQL.

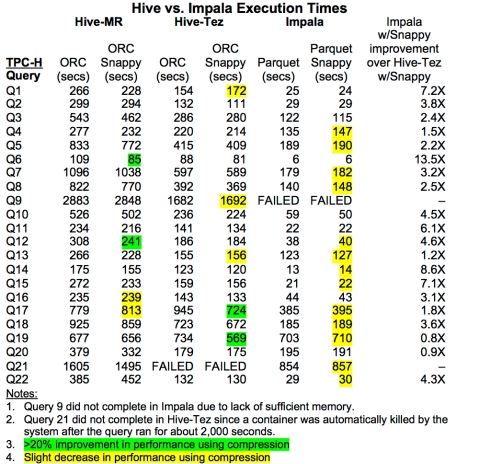

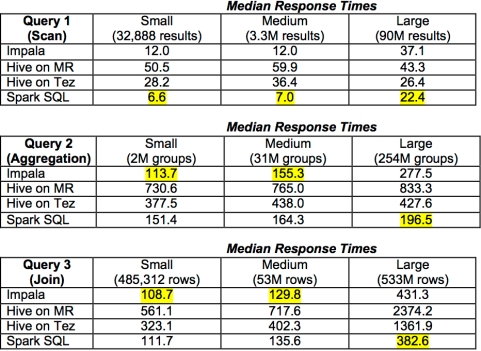

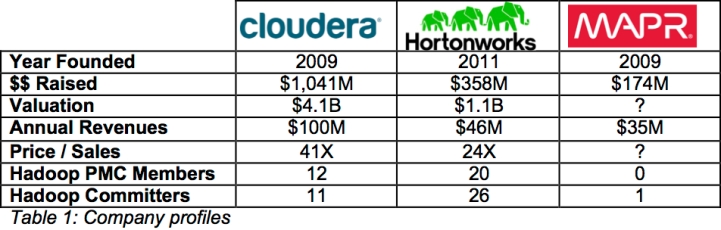

Hive is another tool within the Apache Hadoop project that allows developers to build a metadata layer on top of Hadoop (called “HCatalog”) and then access data using a SQL-like interface (called “HiveQL”). In addition to these open source tools, several commercial products can simplify data access in Hadoop. I expect many more products to come from both the open source and commercial worlds to ease or eliminate the complexity inherent in MapReduce, which is currently the biggest inhibitor to Hadoop adoption. One that bears watching is a tool called “Impala,” which is being developed by Cloudera and allows you to run SQL queries against Hadoop in real time. Unlike Pig and Hive, which must be compiled into MapReduce routines and then run in “batch mode,” Impala runs interactively and directly with the data in Hadoop so that query results begin to return immediately.

Conclusion

Relational database Management Systems (RDBMS) have been around for more than 30 years and have proven to be a far better way to handle data than their predecessors. They are particularly well suited for transactional systems, which quickly and rightfully made them a standard for the type of data processing that was prevalent in the 1980s and 1990s. Decades ago, when BI / DW / Analytical systems became common, we found ways to adapt RDBMS for these systems. However, these adaptations were unnatural in terms of the relational model, and inefficient in terms of the data staging and transformation processes they created. We tolerated this because it achieved acceptable results—for the most part. Besides, what other option did we have?

When companies such as Google, Yahoo!, and Facebook found that relational databases were simply unable to handle the massive volumes of data they have to deal with—and necessity being the mother of invention—a new and better way was developed to put data in context (after all, data in context is information; information in context is knowledge). In this age of Big Data, more companies must now deal with data that not only comes in much higher volumes, but also at much faster velocity and in much greater variety.

Relational databases are no longer the only game in town, and for analytical systems (versus transactional systems), they are no longer the best available option.

About the Author

David Teplow began using Oracle with version 2 in 1981, and has worked as a consultant for Database Technologies (1986-1999) and Integra Technology Consulting (2000-present). He can be reached at: DTeplow@IntegraTC.com

[1] Per Processor License costs from the Oracle Technology Global Price List dated July 19, 2012.